Automating calendars for small newsrooms

Scrapers, sheets and simple tasks

The less time journalists spend on rote tasks like data entry, the better. Yet for smaller newsrooms, automating these processes may seem like a daunting task. However, with just a little coding ability, you can write scrapers, spreadsheet macros and more, giving you the freedom to spend more time doing more meaningful work.

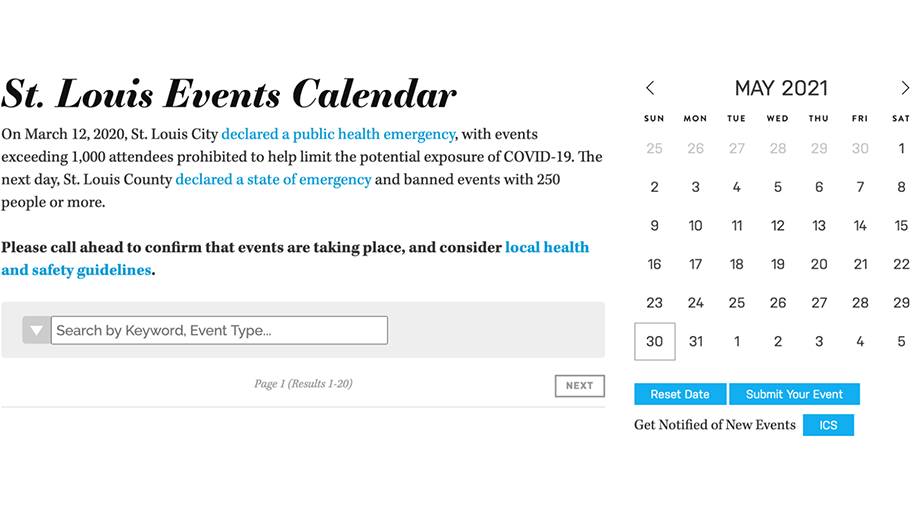

In this project we automated collecting event information for St. Louis Magazine’s website’s event calendar.

This calendar aims to be a central hub where people can learn about events in the St. Louis area. Manual collection of these events used to require a magazine employee scouring websites for hours to track down new exhibitions and activities, and then transferring all that event information into the St. Louis Magazine calendar system. We wanted to help them free up their human resources for other projects — while still populating their event calendar for readers.

There were around 30 different websites that St. Louis Magazine regularly pulled events from, so we knew this project would involve writing quite a few different scrapers. While we considered writing these in Python and hosting the scrapers on AWS Lambda (as we have for other projects), because we wanted the scrapers to run indefinitely, the costs would likely add up over time. Note: AWS does offer a limited free tier that is useful for smaller projects.

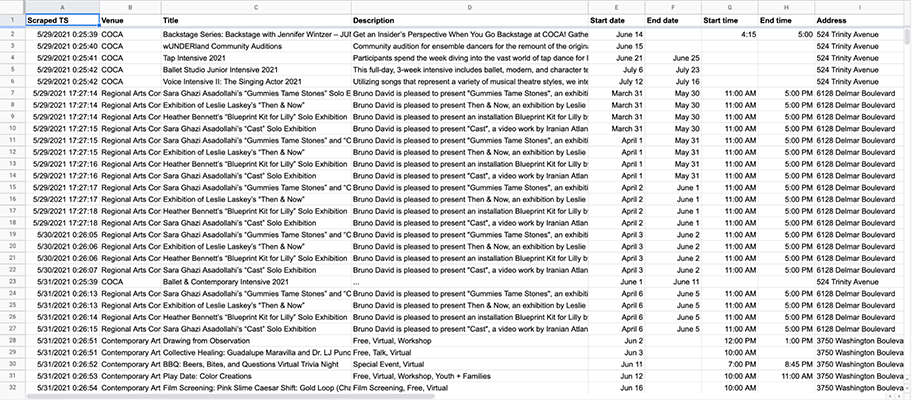

We also wanted to collect all of the scraped events into a Google Sheet so that their journalists could review and interact with the data if needed, which is how we decided to use Google Apps Script.

Google Apps Script provides a simple, free JavaScript cloud development platform. It integrates seamlessly with other Google workspace services, allowing you to integrate tools you already use daily. For example, Apps Scripts could be used to write macros or custom functions for Google Sheets, adding additional functionality or enabling automation of certain tasks.

So, instead of dealing with Google’s API, we attached all of the scripts directly to the spreadsheet and made them scrape the event data into new rows. We used time-based triggers, opting to run all of the scrapers once per day to find new events and bring them into the calendar event spreadsheet. This was based on what the magazine wanted – you can also create triggers based on user interactions with Google workspace services, such as when a document is opened, if you prefer more or less runs of your scrapers.

You can also install external libraries to use in your project. By using these, you’re reusing code other developers have already written which can speed up the development process. However, as Google warns, including external libraries can slow down execution of your code significantly, so in situations where runtime speed is important, other libraries should be used sparingly.

For this project, we used the Cheerio library to aid with HTML DOM parsing, as speed wasn’t necessarily critical (and because Google Apps Script is free to use, cost of longer execution wasn’t a concern). We were able to make requests to different web pages using the built in UrlFetchApp service.

Now, multiple scrapers are working once a day to add new events to the St. Louis Magazine spreadsheet which saves them hours of time on the internet gathering information! This simple automation will continue as long as they need it — freeing up precious time to spend on other projects.